Connect Apache Spark

If you're using Databricks, the dbt-databricks adapter is recommended over dbt-spark. If you're still using dbt-spark with Databricks, consider migrating to the dbt-databricks adapter.

For the Databricks version of this page, see Connect Databricks or Databricks setup.

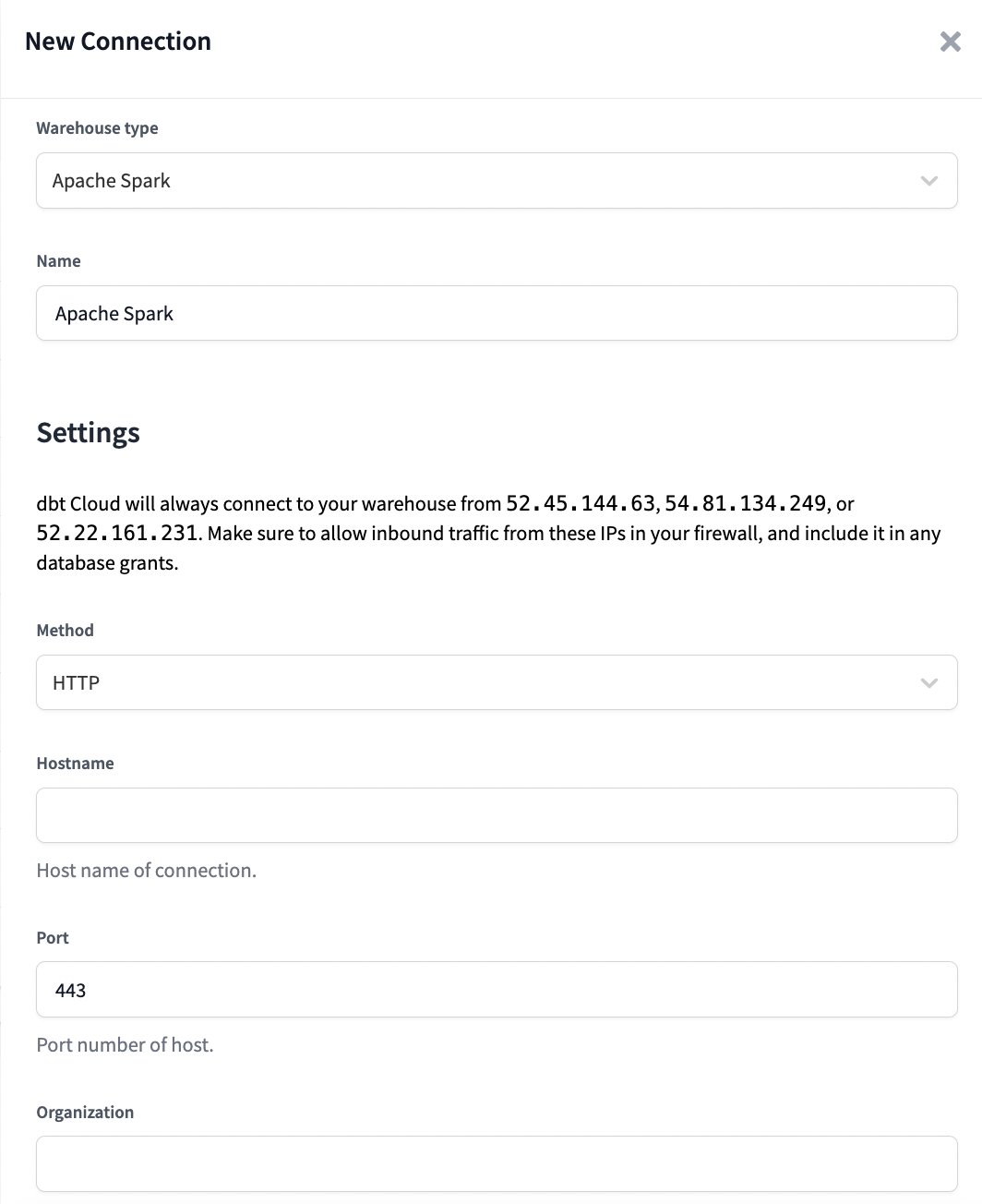

dbt Cloud supports connecting to an Apache Spark cluster using either the HTTP method or the Thrift method. Note: Although the HTTP method can connect to an all-purpose Databricks cluster, the ODBC method is recommended for all Databricks connections. For details on configuring these connection parameters, see the dbt-spark documentation.
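To show why the HTTP method needs both a cluster ID and an organization value, here is a minimal Python sketch of how an HTTPS endpoint URL could be assembled from the connection fields described in the table below. The function name `spark_http_url` is hypothetical, and the `/sql/protocolv1/o/{organization}/{cluster}` path is an assumption based on the dbt-spark source; it may differ between adapter versions, so verify it against the version you use.

```python
# Hypothetical helper: approximates how an HTTP-method Spark connection
# builds its endpoint URL. The path layout below is an assumption based on
# dbt-spark's source and is not guaranteed to match every adapter version.
def spark_http_url(host: str, port: int, cluster: str, organization: str = "0") -> str:
    """Return the HTTPS endpoint an HTTP-method connection would target."""
    url = f"{host}:{port}/sql/protocolv1/o/{organization}/{cluster}"
    # Prepend the scheme only when the host value does not already include it.
    if not url.startswith("https://"):
        url = "https://" + url
    return url


if __name__ == "__main__":
    # Example values taken from the connection fields table.
    print(spark_http_url("yourorg.sparkhost.com", 443, "1234-567890-abc12345"))
```

With the example values, this prints `https://yourorg.sparkhost.com:443/sql/protocolv1/o/0/1234-567890-abc12345`; the `0` segment comes from the default Organization value.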

To learn how to optimize performance with data platform-specific configurations in dbt Cloud, refer to Apache Spark-specific configuration.

The following fields are available when creating an Apache Spark connection using the HTTP and Thrift connection methods:

| Field | Description | Example |
|---|---|---|
| Host Name | The hostname of the Spark cluster to connect to | yourorg.sparkhost.com |
| Port | The port on which to connect to Spark | 443 |
| Organization | The Azure Databricks workspace ID (Azure Databricks only). Optional (default: 0) | 0123456789 |
| Cluster | The ID of the cluster to connect to | 1234-567890-abc12345 |
| Connection Timeout | Number of seconds after which a connection attempt times out | 10 |
| Connection Retries | Number of times to attempt connecting to the cluster before failing | 10 |
| User | Optional | dbt_cloud_user |
| Auth | Optional; supply if using Kerberos | KERBEROS |
| Kerberos Service Name | Optional; supply if using Kerberos | hive |
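The fields above roughly correspond to keys in a dbt-spark profile. The following Python sketch translates dbt Cloud field values into such a profile output and applies a few per-method checks. The label-to-key mapping (`host`, `port`, `organization`, `cluster`, `connect_timeout`, `connect_retries`, `user`, `auth`, `kerberos_service_name`), the function `build_spark_output`, and the validation rules are assumptions for illustration, not dbt Cloud's actual logic.

```python
from typing import Any, Dict

# Assumed mapping from dbt Cloud field labels to dbt-spark profile keys.
FIELD_TO_PROFILE_KEY = {
    "Host Name": "host",
    "Port": "port",
    "Organization": "organization",
    "Cluster": "cluster",
    "Connection Timeout": "connect_timeout",
    "Connection Retries": "connect_retries",
    "User": "user",
    "Auth": "auth",
    "Kerberos Service Name": "kerberos_service_name",
}

# Hypothetical per-method rules (assumptions, not dbt Cloud's validation):
# the HTTP method targets a specific cluster, while the Kerberos fields
# only make sense for the Thrift method.
REQUIRED_KEYS = {
    "http": {"host", "port", "cluster"},
    "thrift": {"host", "port"},
}
THRIFT_ONLY_KEYS = {"auth", "kerberos_service_name"}


def build_spark_output(method: str, fields: Dict[str, Any]) -> Dict[str, Any]:
    """Translate dbt Cloud field values into a dbt-spark-style profile output."""
    if method not in REQUIRED_KEYS:
        raise ValueError(f"unsupported method: {method!r}")
    output: Dict[str, Any] = {"type": "spark", "method": method}
    for label, value in fields.items():
        if label not in FIELD_TO_PROFILE_KEY:
            raise ValueError(f"unknown field: {label!r}")
        if value is None or value == "":
            continue  # optional field left blank
        output[FIELD_TO_PROFILE_KEY[label]] = value

    present = set(output)
    missing = REQUIRED_KEYS[method] - present
    if missing:
        raise ValueError(f"missing required fields for {method}: {sorted(missing)}")
    if method == "http":
        if THRIFT_ONLY_KEYS & present:
            raise ValueError("Kerberos settings apply only to the Thrift method")
        # The table lists Organization as optional with a default of 0.
        output.setdefault("organization", "0")
    return output


if __name__ == "__main__":
    print(build_spark_output("http", {
        "Host Name": "yourorg.sparkhost.com",
        "Port": 443,
        "Cluster": "1234-567890-abc12345",
        "Connection Timeout": 10,
        "Connection Retries": 10,
        "User": "",  # left blank, so it is omitted
    }))
```

Rejecting Kerberos settings under the HTTP method surfaces a likely misconfiguration before any connection attempt is made; drop or adjust that check if your setup differs.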